Deep learning is a family of machine learning and artificial intelligence (AI) techniques loosely modeled on how people learn certain kinds of knowledge. It is an important part of data science, which also includes statistics and predictive modeling. Deep learning is very useful to data scientists who are tasked with gathering, analyzing, and interpreting massive volumes of data, because it makes that process faster and simpler. At its most basic level, deep learning can be viewed as a way to automate predictive analytics. Whereas many conventional machine learning algorithms are linear, deep learning algorithms are stacked in a hierarchy of increasing complexity and abstraction.

To understand deep learning, think of a toddler whose first word is “dog.” The toddler learns what a dog is and is not by pointing at things and saying “dog.” Each time, the parent confirms or corrects the guess. As the toddler keeps pointing at different things, he becomes more aware of the characteristics that all dogs share. Without realizing it, the child clarifies a complex abstraction (the concept of dog) by building a hierarchy in which each level of abstraction is constructed from knowledge gained at the preceding level. Computer programs that use deep learning go through much the same process. Each algorithm in the hierarchy applies a nonlinear transformation to its input and uses what it learns to produce a statistical model as output. Iterations continue until the output is accurate enough to be relied upon. The word “deep” refers to the number of processing layers the data must pass through.
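
As a rough illustration of this layered processing, the sketch below passes an input through two small layers, each applying a weighted sum followed by a nonlinear function. The function names `dense` and `forward`, the choice of `tanh` as the nonlinearity, and the hand-picked weights are all assumptions made for illustration; a real network would learn its weights from data.

```python
import math

def dense(inputs, weights, biases):
    """One layer: a weighted sum of the inputs followed by a nonlinear tanh."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Pass the input through each layer in turn. Later layers see only
    the previous layer's output, never the raw input."""
    activations = list(inputs)
    for weights, biases in layers:
        activations = dense(activations, weights, biases)
    return activations

# Hypothetical hand-picked weights: 3 inputs -> 2 hidden units -> 1 output.
layers = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),
    ([[1.0, -1.0]], [0.0]),
]
output = forward([1.0, 2.0, 3.0], layers)
```

Adding more entries to `layers` makes the stack deeper, which is the sense of “deep” used above.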

In traditional machine learning, the learning process is supervised, and the programmer must be very specific when telling the computer what to look for in order to decide whether an image contains a dog. This painstaking procedure is known as feature extraction, and the computer’s success rate depends directly on the programmer’s ability to define a feature set for dogs precisely. The benefit of deep learning is that the program builds the feature set by itself, without the programmer specifying it. Learning features automatically is usually faster than hand-crafting them and is frequently more accurate. Initially, the program may be given training data, such as a collection of photographs in which each image has been tagged to indicate whether or not it shows a dog. Using what it learns from the training data, the program develops a feature set for dogs and builds a predictive model. In this scenario, the first model might predict that anything in an image with four legs and a tail should be classified as a dog. Of course, the program knows neither the label “four legs” nor the label “tail.” It simply looks for patterns of pixels in the digital data. With each iteration, the predictive model becomes more complex and more accurate.

After being shown a training set, a computer program that uses deep learning algorithms can sort through millions of photographs and accurately identify which ones contain dogs within a few minutes, whereas a child needs weeks or even months to grasp the concept of dog. To reach an acceptable level of accuracy, deep learning programs need access to enormous amounts of training data and processing power. Neither was readily available to programmers until the era of big data and cloud computing. Because deep learning can build complex statistical models directly from its own iterative output, it can create accurate predictive models from large quantities of unlabeled, unstructured data. This matters as the internet of things (IoT) continues to spread, because most of the data that people and devices generate is unstructured and unlabeled.

Deep learning techniques

Various techniques can be used to create strong deep learning models. They include learning rate decay, transfer learning, training from scratch, and dropout.

Learning rate decay:- The learning rate is a hyperparameter that controls how much the model changes in response to the estimated error each time the model weights are updated. A hyperparameter is a setting that defines the system or sets conditions for its operation before learning begins. Learning rates that are too high may cause unstable training or lead the model to learn a suboptimal set of weights. Learning rates that are too low may produce a lengthy training process that can get stuck. Learning rate decay, also called learning rate annealing or adaptive learning rates, is the practice of adjusting the learning rate during training to improve performance and shorten training time. The simplest and most common adaptations are techniques that reduce the learning rate over time.
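
The schedules below are a minimal sketch of how a learning rate might be reduced over time. The function names, the default constants, and the toy objective `f(w) = w**2` are illustrative assumptions and are not taken from any particular library.

```python
import math

def constant(initial_lr, epoch):
    """Baseline for comparison: the rate never changes."""
    return initial_lr

def step_decay(initial_lr, epoch, drop=0.5, epochs_per_drop=10):
    """Multiply the rate by `drop` once every `epochs_per_drop` epochs."""
    return initial_lr * drop ** (epoch // epochs_per_drop)

def exponential_decay(initial_lr, epoch, decay_rate=0.05):
    """Shrink the rate smoothly: initial_lr * exp(-decay_rate * epoch)."""
    return initial_lr * math.exp(-decay_rate * epoch)

def inverse_time_decay(initial_lr, epoch, decay_rate=0.1):
    """Shrink the rate as initial_lr / (1 + decay_rate * epoch)."""
    return initial_lr / (1.0 + decay_rate * epoch)

def minimize_quadratic(schedule, initial_lr, epochs=50, start=5.0):
    """Gradient descent on the toy objective f(w) = w**2, asking
    `schedule` for the learning rate at every epoch."""
    w = start
    for epoch in range(epochs):
        gradient = 2.0 * w
        w -= schedule(initial_lr, epoch) * gradient
    return w

too_high = minimize_quadratic(constant, 1.1)          # overshoots every step
too_low = minimize_quadratic(constant, 0.001)         # hardly moves
decayed = minimize_quadratic(exponential_decay, 1.1)  # starts high, then settles
```

On this toy problem, the constant rate of 1.1 drives the weight further from the minimum at every step, the constant rate of 0.001 leaves it close to its starting value of 5.0 after 50 epochs, and the decayed run ends near zero despite starting from the same high rate.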

Transfer learning:- This technique involves refining a previously trained model, and it requires access to the internals of an existing network. First, users feed the existing network new data that contains previously unknown classes. Once the network has been adjusted, it can perform new tasks with more specific categorization abilities. The advantage of this approach is that it needs much less data than other methods, which reduces computation time to minutes or hours.
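
One common way to apply this idea, assumed here for illustration, is to keep the previously trained layers frozen and train only a new output layer on the new classes. In the sketch below, `PRETRAINED_WEIGHTS` is a hypothetical stand-in for a layer learned earlier, and the data set, function names, and training settings are made up for the example.

```python
import math

# Hypothetical "pretrained" layer. Its weights stand in for features
# learned earlier on a large data set and are never updated below.
PRETRAINED_WEIGHTS = ((1.0, -1.0), (-1.0, 1.0))

def frozen_features(x):
    """Apply the frozen layer: a weighted sum followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)))
            for row in PRETRAINED_WEIGHTS]

def predict(x, head, bias):
    """Probability of the new class from the new output layer (sigmoid)."""
    z = sum(h * f for h, f in zip(head, frozen_features(x))) + bias
    return 1.0 / (1.0 + math.exp(-z))

def train_head(samples, labels, lr=0.5, epochs=200):
    """Fit only the new output layer with stochastic gradient descent
    on the logistic loss; the pretrained layer is left untouched."""
    head, bias = [0.0] * len(PRETRAINED_WEIGHTS), 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            features = frozen_features(x)
            error = predict(x, head, bias) - y
            head = [h - lr * error * f for h, f in zip(head, features)]
            bias -= lr * error
    return head, bias

# Small made-up data set for the new task.
samples = [(2.0, 0.0), (3.0, 1.0), (0.0, 2.0), (1.0, 3.0)]
labels = [1, 1, 0, 0]
head, bias = train_head(samples, labels)
```

Because only the two head weights and the bias are learned, four labeled examples are enough here, which mirrors the reduced data requirement described above.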

Training from scratch:- This method requires a developer to collect a large labeled data set and set up a network architecture that can learn the features and the model. It can be especially useful for new applications and for applications with a large number of output categories. However, it is generally a less common approach, because it needs a great deal of data and training takes days or weeks.

Dropout:- This approach makes an effort to address the issue of overfitting in neural networks with a lot of parameters by randomly removing units and their connections during training. The dropout strategy has been demonstrated to enhance neural network performance on supervised learning tasks in domains like speech recognition, document categorization, and computational biology.
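
A minimal sketch of the idea for a single layer follows. It zeroes randomly chosen activations during training. The rescaling of the surviving units (often called inverted dropout) is an assumption based on common practice and is not described in the text above; the function name and parameters are likewise illustrative.

```python
import random

def dropout(activations, rate, training=True, rng=random):
    """Zero each unit with probability `rate` during training and rescale
    the survivors so the expected sum of the layer stays the same."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in the range [0, 1)")
    if not training or rate == 0.0:
        # At inference time every unit is kept unchanged.
        return list(activations)
    keep_probability = 1.0 - rate
    return [a / keep_probability if rng.random() < keep_probability else 0.0
            for a in activations]

rng = random.Random(0)  # fixed seed so the sketch is repeatable
hidden = [1.0] * 1000
dropped = dropout(hidden, rate=0.5, rng=rng)
```

With `rate=0.5`, roughly half of the units are zeroed on each call and the rest are doubled, so no single unit can be relied on throughout training.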

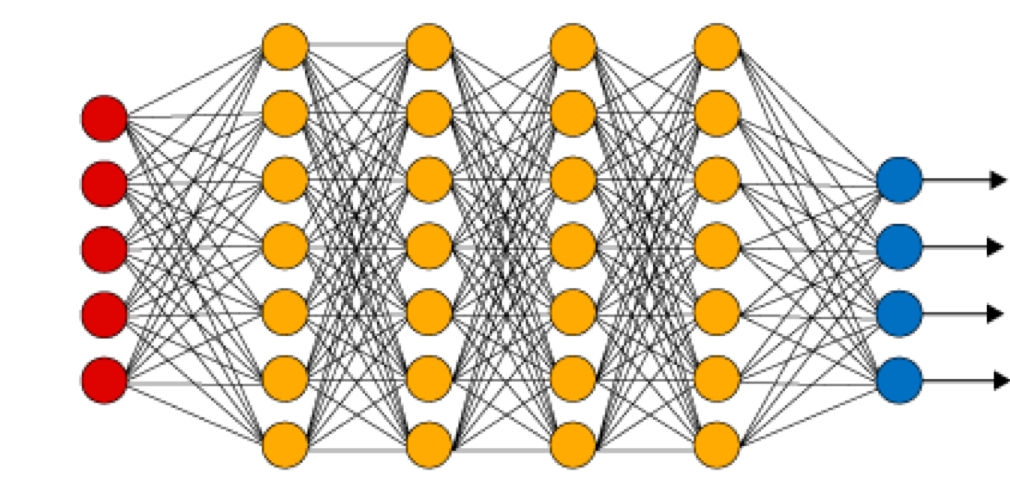

The majority of deep learning models are underpinned by an artificial neural network, a sort of sophisticated machine learning algorithm. Deep learning is hence also known as deep neural learning or deep neural networking.

Each type of neural network, such as feedforward neural networks, recurrent neural networks, and convolutional neural networks, has advantages for particular use cases. However, they all work in a broadly similar way: data is fed into the model, and the model then works out for itself whether it has made the correct interpretation or decision about a given data element. Because neural networks learn by trial and error, they require enormous volumes of training data. It is no accident that neural networks became popular only after most businesses had adopted big data analytics and accumulated large stores of data. Because the model’s first few iterations involve educated guesses about the contents of an image or parts of speech, the data used during the training stage must be labeled so that the model can check whether its guess was correct. This means that although many businesses that use big data hold large amounts of it, unstructured data is less useful. A deep learning model cannot train on unstructured data; it can analyze such data only once the model has been trained and has reached an acceptable degree of accuracy.

Because deep learning models process information in ways loosely similar to the human brain, they can be applied to many tasks that people do. Deep learning is currently used in most common speech recognition, natural language processing (NLP), and image recognition tools. These tools are starting to appear in applications as diverse as self-driving cars and language translation services.

Today’s use cases for deep learning include all kinds of big data analytics applications, especially those focused on NLP, language translation, medical diagnosis, stock market trading signals, network security, and image recognition. Specific fields in which deep learning is currently used include the following:

- Customer experience:- Chatbots already use deep learning models. As the technology matures, deep learning is expected to be adopted in a variety of businesses to improve customer experience and increase customer satisfaction.

- Text generation:- Machines are taught the grammar and style of a piece of text and then use this model to automatically create new text that matches the spelling, grammar, and style of the original.

- Military and aerospace:- Deep learning is being used to identify objects detected by satellites that indicate areas of interest, as well as zones that are safe or unsafe for military forces.

- Automated manufacturing:- By offering services that automatically recognize when a worker or object is approaching too close to a machine, deep learning is enhancing worker safety in settings like factories and warehouses.

- Medical research:- Cancer researchers have started using deep learning in their work as a way to automatically detect cancer cells.

Difficulties of Deep Learning

The primary drawback of deep learning models is that they learn only from observations. They therefore know only what was in the data on which they were trained. If a user has a small amount of data, or if the data comes from a single source that is not necessarily representative of the broader functional area, the models will not learn in a way that generalizes.

Bias is also a major concern for deep learning models. If a model is trained on biased data, its predictions will reflect those biases. This has been a frustrating problem for deep learning programmers, because models learn to differentiate based on subtle variations in data elements. Often, the model does not make explicit to the programmer which factors it has decided are important.

The learning rate can also become a major challenge. If the rate is too high, the model will converge too quickly and produce a less-than-optimal solution. If the rate is too low, the process may get stuck, and reaching a solution becomes even harder.

Hardware requirements can create limitations as well. Multicore high-performing graphics processing units (GPUs) and other similar processing units are required to ensure improved efficiency and decreased time consumption. However, these units are expensive and use large amounts of energy. Other hardware requirements include random access memory (RAM) and a hard disk drive (HDD) or RAM-based solid-state drive (SSD).

Other limitations and challenges include the following:

- Large volumes of data are necessary for deep learning. In addition, stronger and more precise models will demand more parameters, which in turn call for more data.

- Once trained, deep learning models are rigid and cannot handle multitasking. They can solve only one specific problem efficiently and accurately. Even solving a similar problem would require retraining the system.

- Any application that requires reasoning, such as programming or applying the scientific method, is entirely beyond what current deep learning approaches can do, even with vast amounts of data.